Databricks Platform: Key Capabilities and Benefits for Businesses

As data volumes keep growing, it becomes more difficult for enterprises to manage and analyze data, especially in multi-cloud environments. This is where big data tools like the Databricks Lakehouse Platform come into play.

As experts in all things data, we will dive into Databricks’ capabilities and explain the benefits you can drive with this data-fueled platform.

What Is Databricks? A Brief Overview

Databricks is a cloud-based platform used to build, store, process, maintain, clean, share, analyze, model, and monetize enterprise-grade data. Available on multiple clouds — including AWS, Microsoft Azure, and Google Cloud — and their combinations, Databricks integrates the fields of data science, engineering, and business. This facilitates processes from data preparation to machine learning deployment, including:

- Data ingestion

- Processing, scheduling, management

- Data annotation and exploration

- Dashboard generation

- Visualizations

- Security and governance management

- Compute management

- ML modeling and tracking.

By combining a data lake and a data warehouse into the new concept of a data lakehouse, Databricks provides a single data source without unwanted data silos. The platform works with both structured and unstructured data and supports workloads that are distributed across multiple processors and can be scaled up and down on demand. It creates an effective working environment for the whole data science team, including data engineers, analysts, and ML engineers.

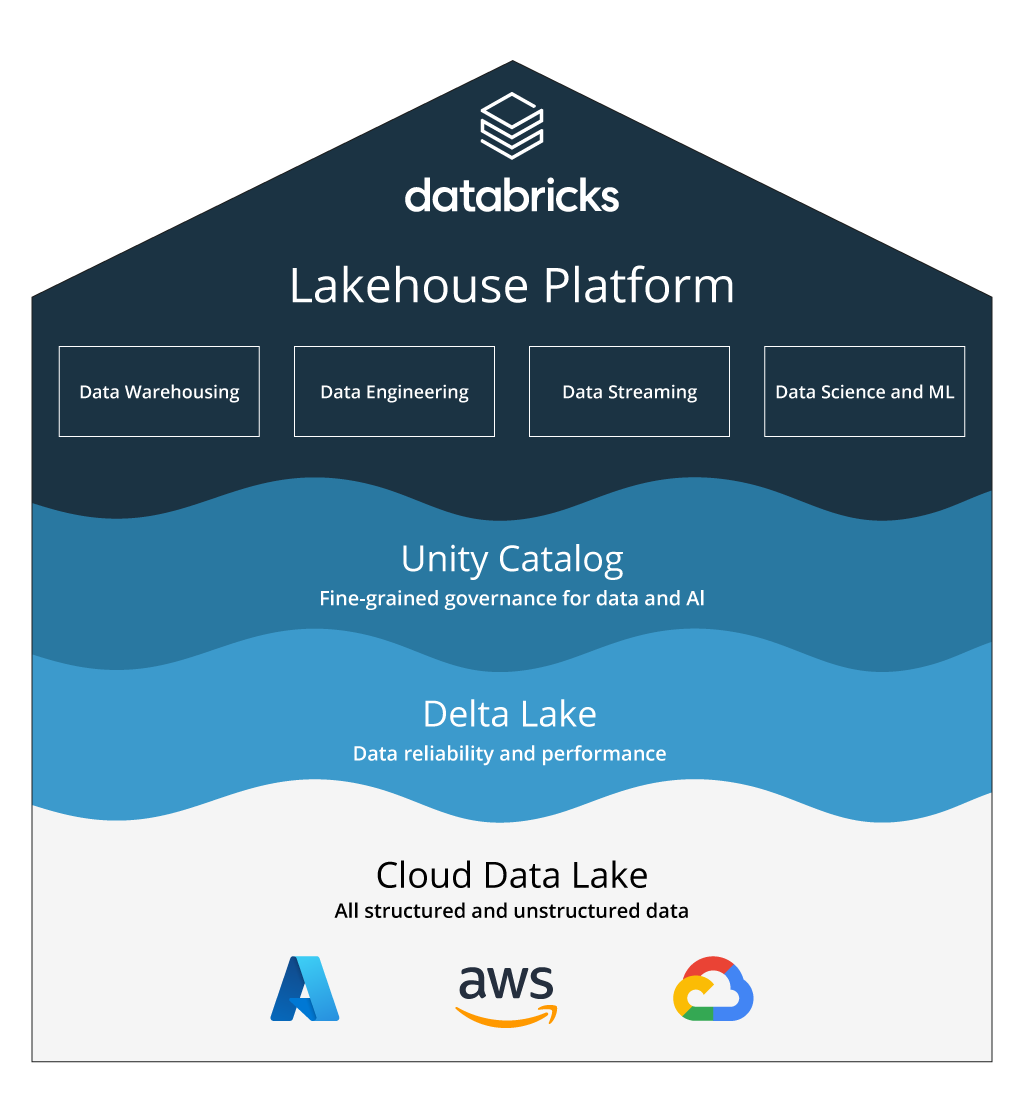

Databricks Architecture: Core Components

At the heart of Databricks are four open-source tools delivered as a service in the cloud: MLflow, Apache Spark, Koalas (now part of Apache Spark as the pandas API on Spark), and Delta Lake. Built to manage huge amounts of data effectively, the platform focuses on ensuring data integrity, high data quality, and top-notch performance. To make it all possible, Databricks leverages Delta Lake and Unity Catalog.

Delta Lake is the open-source storage layer used to store data and tables in the Databricks platform. It adds reliability and integrity to an existing data lake through ACID transactions, in which each transaction is treated as a single unit of work:

- Atomicity treats all statements in a transaction as a single unit: either all of them succeed or none do. This prevents data loss and corruption, for example, when a streaming source fails mid-stream.

- Consistency ensures that all changes follow predefined rules, so errors do not compromise the integrity of the table.

- Isolation means that concurrent transactions on the same table do not interfere with or affect each other.

- Durability ensures that all committed changes to your data persist even if the system fails.
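To make these properties more concrete, here is a toy, in-memory model of a versioned commit log, loosely in the spirit of Delta Lake’s transaction log. It is a conceptual sketch, not the Delta Lake API: `ToyDeltaTable`, `commit`, and `ConcurrentCommitError` are hypothetical names. A batch is validated as a whole and becomes visible as one new version (atomicity and consistency), and a writer whose read version is stale is rejected, a simplified form of the optimistic concurrency control Delta Lake uses for isolation. Durability, which in Delta Lake comes from persisting commit files to storage, is not modeled.

```python
from dataclasses import dataclass, field


class ConcurrentCommitError(Exception):
    """Hypothetical error: another writer committed after this writer read the table."""


@dataclass
class ToyDeltaTable:
    # Hypothetical in-memory stand-in for a table plus its ordered commit log.
    rows: list = field(default_factory=list)
    log: list = field(default_factory=list)  # one entry per committed version

    @property
    def version(self) -> int:
        # Number of commits so far; a writer records this when it reads the table.
        return len(self.log)

    def commit(self, read_version: int, new_rows: list) -> int:
        # Isolation (simplified): reject the commit if the table changed since it was read.
        if read_version != self.version:
            raise ConcurrentCommitError(
                f"read version {read_version}, table is at {self.version}")
        # Consistency: validate every row before anything becomes visible.
        for row in new_rows:
            if not isinstance(row.get("id"), int):
                raise ValueError(f"invalid row: {row!r}")
        # Atomicity: the whole batch appears at once as a single new version.
        self.rows = self.rows + list(new_rows)
        self.log.append({"added": len(new_rows)})
        return self.version


if __name__ == "__main__":
    table = ToyDeltaTable()
    seen = table.version                          # a writer reads version 0
    table.commit(seen, [{"id": 1}, {"id": 2}])    # becomes version 1
    try:
        table.commit(table.version, [{"id": 3}, {"id": "oops"}])
    except ValueError:
        pass                                      # whole batch rejected, no partial write
    print(len(table.rows), table.version)         # 2 1
```

Rejecting the stale writer instead of silently merging is what keeps concurrent transactions from interfering; a real system would let that writer re-read the table and retry.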

Unity Catalog is responsible for centralized metadata management and data governance for all of Databricks’ data assets, including files, tables, dashboards, notebooks, and machine learning models. Among the key features are:

- Unified management of data access policies

- ANSI SQL-based security model

- Advanced data discovery

- Automated data auditing and lineage.
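Unity Catalog addresses data through a three-level namespace (`catalog.schema.table`) and manages access with SQL statements such as `GRANT SELECT ON TABLE main.sales.orders TO analysts`; a privilege granted on a catalog or schema is inherited by the objects inside it. The toy model below illustrates only that inheritance rule and an audit trail. `ToyCatalog` and its methods are hypothetical, and the sketch deliberately omits parts of the real model, such as the `USE CATALOG` and `USE SCHEMA` privileges and group membership.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCatalog:
    # Hypothetical policy store: (principal, securable) -> set of privileges,
    # e.g. ("analysts", "main.sales") -> {"SELECT"}.
    grants: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def grant(self, privilege: str, securable: str, principal: str) -> None:
        self.grants.setdefault((principal, securable), set()).add(privilege)
        self.audit_log.append(("GRANT", privilege, securable, principal))

    def can(self, principal: str, privilege: str, table: str) -> bool:
        # Check the table, then its schema, then its catalog:
        # a grant on a parent object is inherited by everything below it.
        parts = table.split(".")
        allowed = any(
            privilege in self.grants.get((principal, ".".join(parts[:n])), set())
            for n in range(len(parts), 0, -1)
        )
        # Every access decision is recorded, allowed or not.
        self.audit_log.append(("CHECK", privilege, table, principal, allowed))
        return allowed
```

For example, after `grant("SELECT", "main.sales", "analysts")`, the `analysts` group can read every table in the `main.sales` schema but cannot modify them or read tables in other schemas.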

Together, these components provide the powerful functionality that makes the platform stand out and deliver tangible business value.

Databricks Features and Capabilities

Data Engineering

Databricks leverages Apache Spark to ingest and transform both streaming and batch data at scale on one platform, eliminating silos. ETL (extract, transform, load) and ML workflows are simplified with Delta Live Tables (DLT), a framework that automates operational complexities like task orchestration, infrastructure management, performance optimization, and error recovery. With DLT, engineers can deploy scalable pipelines by treating data as code, that is, by applying software engineering best practices such as testing, monitoring, and documentation.
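In Delta Live Tables, data quality rules are declared as expectations on a dataset; for example, the `@dlt.expect_or_drop` decorator drops rows that violate a SQL condition and records how many were dropped. The plain-Python sketch below imitates that pattern so it can run without a Databricks workspace. The `expect_or_drop` decorator here is a hypothetical stand-in that takes a Python predicate instead of a SQL expression, and the `clean_orders` dataset and its rule are made up.

```python
import functools
from collections import Counter
from typing import Callable, Iterable, List

Row = dict
metrics: Counter = Counter()  # dropped-row counts per expectation name


def expect_or_drop(name: str, predicate: Callable[[Row], bool]):
    """Hypothetical stand-in for a DLT expectation: drop failing rows and count them."""
    def decorator(transform: Callable[[Iterable[Row]], Iterable[Row]]):
        @functools.wraps(transform)
        def wrapped(rows: Iterable[Row]) -> List[Row]:
            kept = []
            # The rule is applied to the output of the transformation.
            for row in transform(rows):
                if predicate(row):
                    kept.append(row)
                else:
                    metrics[name] += 1
            return kept
        return wrapped
    return decorator


@expect_or_drop("valid_amount", lambda r: r["amount"] is not None and r["amount"] >= 0)
def clean_orders(rows: Iterable[Row]) -> Iterable[Row]:
    # The transformation itself: normalize currency codes to upper case.
    return ({**r, "currency": r["currency"].upper()} for r in rows)
```

Because the transformation and its rule are ordinary functions, they can be unit-tested like any other code, which is the practical meaning of treating data as code.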

Other data engineering capabilities include reliable workflow orchestration with Databricks Workflows, which offers full observability and monitoring across the entire data and AI lifecycle, as well as a cutting-edge data processing engine and state-of-the-art data governance.
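A Databricks Workflows job is a set of tasks with dependencies between them (in a job definition, each task lists the tasks it `depends_on`), and tasks can be configured to retry on failure. Below is a minimal, sequential sketch of that orchestration logic using Python’s standard `graphlib` module (Python 3.9+). The `run_job` helper, the task names, and the retry policy are hypothetical; the real service also runs independent tasks in parallel and offers richer settings.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


def run_job(tasks: Dict[str, Callable[[], None]],
            depends_on: Dict[str, List[str]],
            max_retries: int = 1) -> List[str]:
    """Run tasks in dependency order; retry a failing task up to max_retries times."""
    # Every task is a node; its predecessors are the tasks it depends on.
    graph = {key: depends_on.get(key, []) for key in tasks}
    log: List[str] = []
    for key in TopologicalSorter(graph).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[key]()
            except Exception as exc:  # a real orchestrator would classify errors
                log.append(f"{key}: attempt {attempt} failed ({exc})")
            else:
                log.append(f"{key}: succeeded on attempt {attempt}")
                break
        else:
            # All attempts failed: stop so downstream tasks never run on bad input.
            raise RuntimeError(f"task {key!r} failed after {max_retries + 1} attempts")
    return log
```

Topological ordering guarantees that a task starts only after everything it depends on has succeeded; `graphlib` also raises `CycleError` if the dependencies contain a cycle.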

Data Analytics

For business intelligence specialists, Databricks offers advanced analytics capabilities in the form of Databricks SQL. This interactive engine lets you write and execute SQL queries just as in traditional SQL-based systems, and create and share visuals, dashboards, and reports. Smooth integration with popular BI tools like Tableau, Looker, and Power BI lets these tools query the most recent and complete data in your data lake through Databricks SQL, resulting in more coherent reports. Moreover, thanks to an active server fleet under the hood, Databricks SQL is reported to submit workloads to a compute engine almost six times faster than traditional data warehouses.

A case in point: Infopulse made the most of Azure services (including Azure SQL, Data Factory, and Analysis Services) to introduce a robust data management solution to an agro-industrial group. The delivered platform helped the client reduce the time and effort needed to generate custom, self-service BI reports.
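Because Databricks SQL accepts standard SQL, a dashboard query looks much as it would on any other SQL engine. The Databricks SQL Connector for Python (`databricks-sql-connector`) follows the Python DB API 2.0 (PEP 249), so the report function below is written against that generic interface and, to keep the sketch runnable offline, demonstrated with the standard-library `sqlite3` module as a stand-in. The `sales` table, its columns, and the `revenue_by_region` helper are hypothetical; against a real SQL warehouse the connection would come from `databricks.sql.connect(...)` with your workspace’s server hostname, HTTP path, and access token.

```python
import sqlite3

# Hypothetical dashboard query over a hypothetical `sales` table.
REPORT_SQL = """
    SELECT region, SUM(amount) AS revenue
    FROM sales
    GROUP BY region
    ORDER BY revenue DESC
"""


def revenue_by_region(conn) -> list:
    """Run a dashboard-style aggregate over any PEP 249 (DB API 2.0) connection."""
    cursor = conn.cursor()
    try:
        cursor.execute(REPORT_SQL)
        return [tuple(row) for row in cursor.fetchall()]
    finally:
        cursor.close()


if __name__ == "__main__":
    # Local stand-in for a Databricks SQL warehouse.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)",
                     [("EU", 120.0), ("US", 200.0), ("EU", 100.0)])
    print(revenue_by_region(conn))  # [('EU', 220.0), ('US', 200.0)]
```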

Machine Learning

Another module you get when adopting Databricks is its Machine Learning platform. It is an environment for experimentation and innovation around developing, training, deploying, and sharing different ML models. This is possible thanks to its built-in algorithms, hyperparameter tuning tools, feature engineering capabilities, model evaluation functionality, as well as support for popular ML libraries (PyTorch, TensorFlow, Keras, Horovod, XGBoost).
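Hyperparameter tuning, one of the capabilities listed above, comes down to training one model per candidate setting and keeping the setting that scores best on held-out data. The sketch below shows that loop as a plain grid search over the regularization strength λ of a one-feature ridge regression (slope only, closed form w = Σxy / (Σx² + λ)), using only the standard library. The data, grid, and function names are made up for illustration; on Databricks, the same loop could be handed to the platform’s tuning tools and distributed across a cluster.

```python
from typing import Dict, List, Sequence, Tuple

Dataset = Tuple[List[float], List[float]]  # (xs, ys)


def fit_ridge_1d(xs: List[float], ys: List[float], lam: float) -> float:
    """Closed-form ridge slope for y ≈ w*x (no intercept): w = Σxy / (Σx² + λ)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(w: float, xs: List[float], ys: List[float]) -> float:
    """Mean squared error of the slope-only model on one dataset."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train: Dataset, val: Dataset,
                grid: Sequence[float]) -> Tuple[float, Dict[float, float]]:
    """Fit one model per candidate λ and keep the one with the lowest validation error."""
    scores = {lam: mse(fit_ridge_1d(*train, lam), *val) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


train = ([1.0, 2.0, 3.0], [1.0, 2.0, 6.0])  # the last point is a noisy outlier
val = ([4.0, 5.0], [4.0, 5.0])
best_lam, scores = grid_search(train, val, [0.0, 1.0, 5.0, 10.0, 20.0])
print(best_lam)  # 10.0
```

Here the outlier in the training data inflates the unregularized slope, so a moderate λ generalizes best to the validation points.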

Collaboration

Databricks enables data engineers, data scientists, and ML specialists to work in a collaborative workspace through shared Notebooks, using multiple programming languages (Python, Scala, R, SQL, etc.).

Through these and other powerful capabilities, data engineers can simplify collaboration and improve efficiency:

- Real-time coauthoring lets users work on the same notebook simultaneously and see each other’s changes in real time.

- Commenting lets users leave comments and instantly notify colleagues within a shared Notebook.

- Automatic versioning tracks changes and versions of your work so you can continue where you left off.

- Job scheduling lets you run production pipelines on a specific schedule.

- Permissions management uses a common security model to control access to each notebook.

- Automated logs and notifications enable easy monitoring and troubleshooting.

Databricks Benefits for Businesses

Databricks can become the core of the modern data stack in large-scale enterprises. Here are the reasons why.

Increased Scalability

The main appeal of Databricks is its ability to handle huge amounts of data effectively. Whether you need a single-node cluster for simple data analysis or a multi-node cluster for production-level workloads, Databricks has you covered. The platform decouples storage from compute and offers virtually unlimited scalability, so users always have resources for data cleaning and analysis, feature engineering, model training, and other time- and resource-intensive operations. The result is considerable time savings and increased efficiency.
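Because storage and compute are decoupled, cluster size can follow the workload rather than the data volume. Databricks clusters can be given an autoscaling range, a minimum and maximum number of workers (`min_workers` and `max_workers` in a cluster specification). The function below is a deliberately simplified, hypothetical scaling rule, not Databricks’ actual autoscaling algorithm: it sizes the cluster to the pending work and clamps the result to the configured range.

```python
import math


def target_workers(pending_tasks: int, tasks_per_worker: int,
                   min_workers: int, max_workers: int) -> int:
    """Hypothetical rule: enough workers for the pending tasks, within [min, max]."""
    if tasks_per_worker <= 0 or min_workers > max_workers:
        raise ValueError("invalid autoscaling configuration")
    needed = math.ceil(pending_tasks / tasks_per_worker)
    # Never shrink below the floor or grow beyond the ceiling.
    return max(min_workers, min(max_workers, needed))
```

With a range of 2 to 8 workers and 4 tasks per worker, an idle cluster stays at 2 workers, 18 pending tasks call for 5, and 100 pending tasks are capped at 8.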

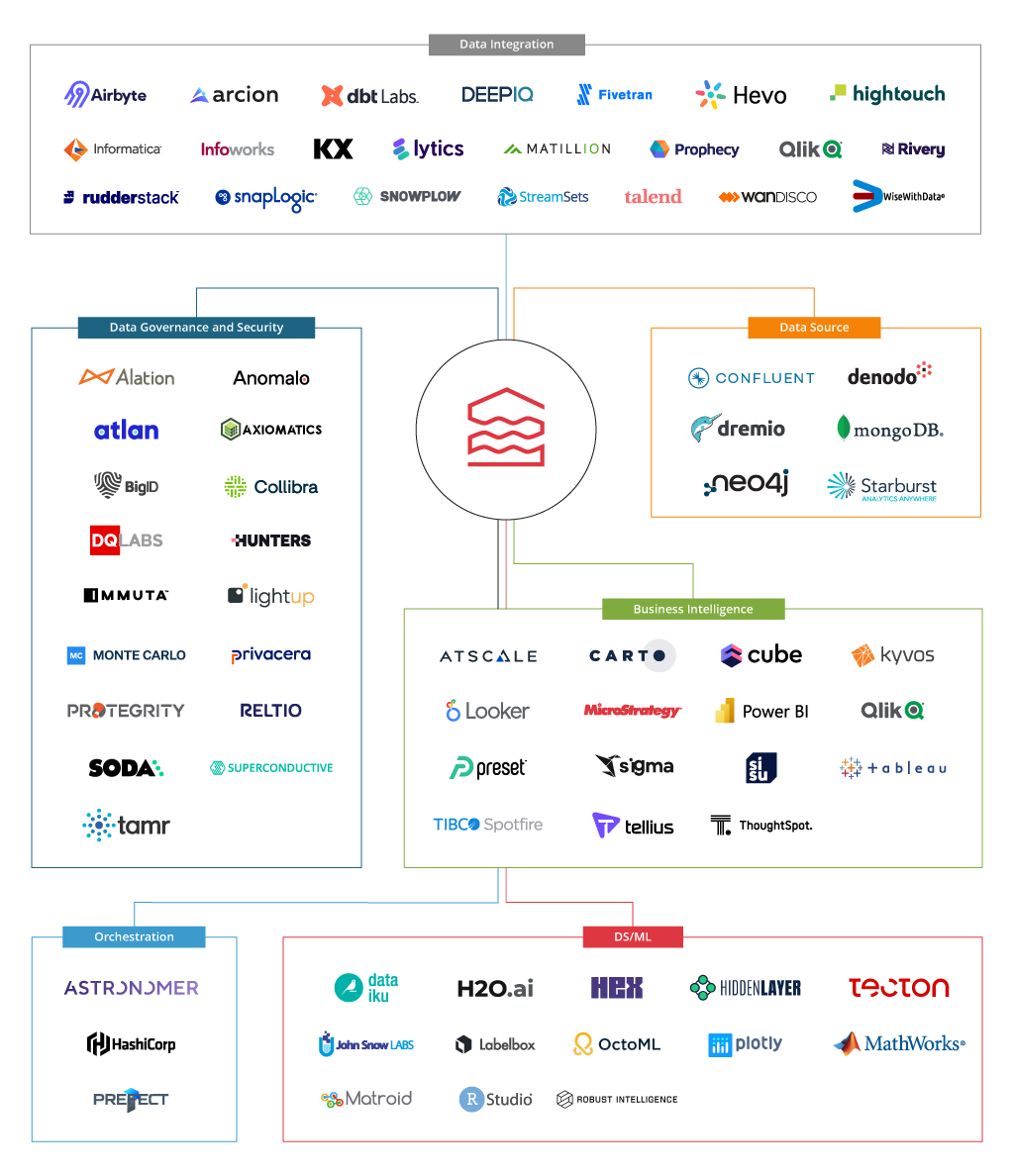

Powerful Integrations

Databricks can be smoothly integrated with industry-leading tools to enable additional capabilities around data ingestion, ETL, data governance, business intelligence, and ML. This means you can perform all data-related operations in one place — no need to switch among multiple applications.

If your industry-specific tasks require more sophisticated functionality not covered by Databricks’ partners, team up with an experienced data analytics company to add custom modules to your platform.

Interoperability

Databricks does not require you to transfer data into a proprietary system, and it connects easily to your cloud environment of choice, such as Azure, Google Cloud, or AWS. Because Databricks’ products are portable, you can easily adopt a multi-cloud strategy and avoid vendor lock-in.

Besides, the platform offers Delta Sharing, an open protocol for securely sharing live data across clouds, platforms, organizations, and regions. Companies no longer need to replicate data and can centrally govern the shared data on one platform, increasing overall workflow efficiency.
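Since Delta Sharing is an open REST protocol, a recipient needs little more than a profile file from the data provider: a small JSON document containing the sharing server’s `endpoint` and a `bearerToken`. The sketch below parses such a profile and prepares, without sending, the protocol’s list-shares request (`GET {endpoint}/shares`) with the standard library. The endpoint and token are placeholders, `list_shares_request` is a hypothetical helper, and in practice you would usually rely on the open-source `delta-sharing` connector rather than raw HTTP.

```python
import json
import urllib.request


def list_shares_request(profile_json: str) -> urllib.request.Request:
    """Build (but do not send) a Delta Sharing list-shares request from a profile file."""
    profile = json.loads(profile_json)
    if profile.get("shareCredentialsVersion") != 1:
        raise ValueError("unsupported profile version")
    endpoint = profile["endpoint"].rstrip("/")
    return urllib.request.Request(
        f"{endpoint}/shares",
        headers={"Authorization": f"Bearer {profile['bearerToken']}"},
        method="GET",
    )


# Placeholder values; a real profile is issued by the data provider.
PROFILE = json.dumps({
    "shareCredentialsVersion": 1,
    "endpoint": "https://sharing.example.com/delta-sharing/",
    "bearerToken": "<token>",
})
req = list_shares_request(PROFILE)
print(req.full_url)  # https://sharing.example.com/delta-sharing/shares
```

Sending the request (for example with `urllib.request.urlopen(req)`) would return a JSON list of the shares the token grants access to.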

Rock-Solid Security

According to recent statistics, 45% of security breaches are cloud-based, and 80% of companies have experienced at least one cloud security incident in the last year. To protect a company’s data and workloads, Databricks implements comprehensive security mechanisms:

- On top of encrypting data at rest in the control plane through cryptographically secure techniques, Databricks also offers protection with customer-managed keys (CMK).

- Private Link sets up end-to-end private networking for the Databricks platform, reducing the risks of accidental firewall misconfiguration and of traffic inspection by attackers.

- Serverless workloads are executed within multiple layers of isolation, which can be tested both in-house and by external penetration testing companies.

- The Enhanced Security and Compliance add-on includes enhanced security monitoring (behavior-based malware monitoring, file integrity monitoring, hardened images, and vulnerability reporting) and the Compliance Security Profile (FIPS 140-2 encryption, automatic cluster updates, and AWS Nitro VM enforcement).

Cloud-Native Nature

The Databricks platform is available on top of your existing cloud, whether it is AWS, Microsoft Azure, or Google Cloud. No matter if you choose one cloud provider or leverage a multi-cloud approach, you get access to all the necessary resources:

- Storage. Databricks stores data in each cloud’s native object storage: Amazon S3 on AWS, Azure Data Lake Storage Gen2 on Azure, and Google Cloud Storage on Google Cloud.

- Compute clusters. Databricks runs compute on EC2 virtual machines on AWS, Azure VMs on Azure, and Google Kubernetes Engine on Google Cloud.

- Networking and security. Databricks integrates with the company’s existing networks, access management, and secrets storage to ensure the integrity and privacy of data.
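Since Databricks works directly on each provider’s native object storage, the same table path is addressed with a different URI scheme per cloud. The helper below maps a location to the URI forms commonly used with Spark on Databricks (`s3://` for Amazon S3, `abfss://<container>@<account>.dfs.core.windows.net/` for ADLS Gen2, and `gs://` for Google Cloud Storage). The function and the bucket, container, and account names in the example are hypothetical.

```python
def storage_uri(cloud: str, path: str, *, bucket: str = "",
                container: str = "", account: str = "") -> str:
    """Build a native object-storage URI for a table path on the given cloud."""
    path = path.lstrip("/")
    if cloud == "aws":
        return f"s3://{bucket}/{path}"
    if cloud == "azure":
        # ADLS Gen2 is addressed through the storage account's dfs endpoint.
        return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"
    if cloud == "gcp":
        return f"gs://{bucket}/{path}"
    raise ValueError(f"unknown cloud: {cloud!r}")


if __name__ == "__main__":
    print(storage_uri("azure", "sales/orders", container="lake", account="acmedata"))
    # abfss://lake@acmedata.dfs.core.windows.net/sales/orders
```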

The cloud’s microservices-based nature gives you more resilience and reliability: in case of an incident, you can isolate particular modules to minimize the impact on the entire application. Operating on cloud-native infrastructure also makes it easier to switch between providers, which helps optimize costs, leverage the best features of each, and avoid vendor lock-in.

End-to-End ML Support

Databricks brings enterprise-grade reliability, scale, and security to the whole ML lifecycle, from rapid iteration to large-scale production applications. With its built-in MLflow tool, you can:

- Discover and share new ML models

- Manage and deploy large language models (LLMs)

- Run experiments with any ML library, language, or framework, and share the results

- Develop and deploy production-ready models

- Monitor ML deployments and their performance

- Test ML models and integrate them with approval and governance workflows

- Share models with peers through the MLflow Model Registry.
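The tracking-and-registry workflow in the list above follows a simple pattern: log each run’s parameters and metrics, compare runs on a metric, and register the best model as a new numbered version. With the real library, logging uses calls such as `mlflow.start_run()`, `mlflow.log_param()`, and `mlflow.log_metric()`. The toy tracker below reproduces only the pattern in plain Python so it runs anywhere; `ToyTracker`, `Run`, and their methods are hypothetical and are not MLflow’s API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    run_id: int
    params: Dict[str, object] = field(default_factory=dict)
    metrics: Dict[str, float] = field(default_factory=dict)


@dataclass
class ToyTracker:
    runs: List[Run] = field(default_factory=list)
    # Hypothetical registry: model name -> run ids, one entry per model version.
    registry: Dict[str, List[int]] = field(default_factory=dict)

    def log_run(self, params: Dict[str, object], metrics: Dict[str, float]) -> Run:
        # Copy the inputs so later changes by the caller do not alter the record.
        run = Run(run_id=len(self.runs), params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        # Compare all logged runs on a single metric.
        pick = max if higher_is_better else min
        return pick(self.runs, key=lambda r: r.metrics[metric])

    def register(self, name: str, run: Run) -> int:
        # Each registration creates a new model version, numbered from 1.
        versions = self.registry.setdefault(name, [])
        versions.append(run.run_id)
        return len(versions)
```

For example, after logging three runs of a classifier with different `max_depth` values, `best_run("auc")` returns the run with the highest AUC, and registering it yields version 1 of the model.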

Conclusion

Once you have decided to leverage Databricks’ capabilities and benefits to enhance your business operations, keep in mind that implementing the platform is not an easy process. Getting it right, accounting for all technical specifics, and ensuring business continuity calls for expert help from a data analytics company like Infopulse.
